On avoiding band-aid security

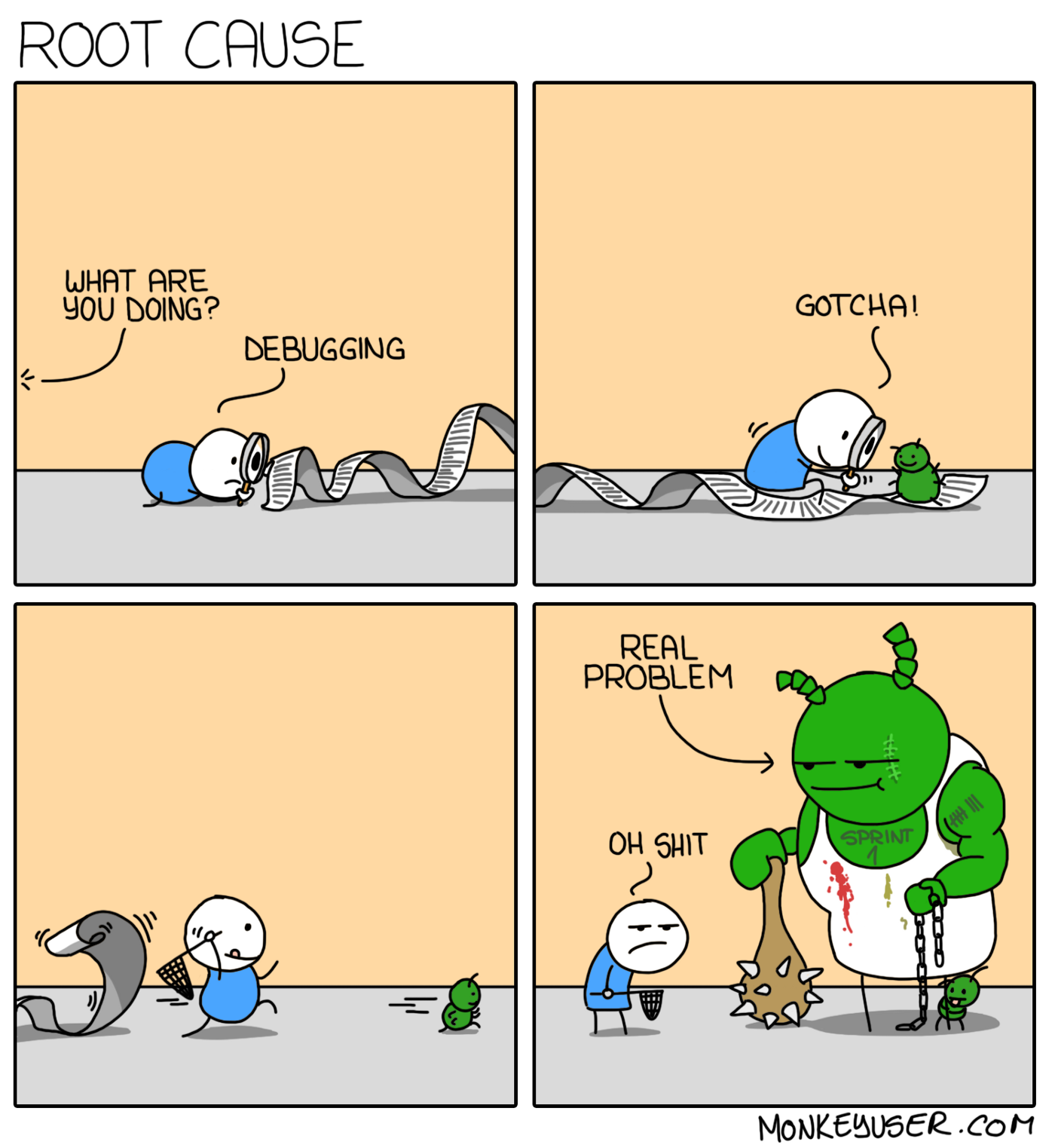

Applying a band-aid to an open wound is an important step in preventing infection, yet it rarely addresses the underlying issue.

One of my duties as an executive at a security products company is helping key customers better understand the security challenges they’re going through and shape the vision for future improvements.

The other day I was talking to a customer, and a familiar narrative surfaced:

“We’ve had a pentest from company X, we’ve covered 100% of the detected weaknesses and devised a long-term security improvement program based on their recommendations. Yet, a year after that, we’ve had 2 incidents. We’re considering taking X to court, and we’ve had to fire our CTO, because how could he have let this happen to us after all we’ve done?”

Knowing company X, I have no reason to doubt the depth and quality of the assessment. In fact, they are a rare species in the pentesting world: they not only provide “immediate relief” advice, but also point out at least some systemic flaws and approaches to systemic mitigation. Yet, while the idea of a one-time intervention that diagnoses the riskiest components of a system is commonplace now, how that diagnosis is used to improve security posture rarely gets discussed.

The typical result of any penetration test is a list of detected vulnerabilities. At best, it comes with generic mitigation advice and points out general flaws in the system’s design and the company’s technical processes.

When analyzed thoroughly, any large picture of disjointed diagnostic signals from a system converges into several root causes that govern the system’s unfit behavior. If it doesn’t, either it’s not a system at all or the analysis is wrong. Without going into the pitfalls of how typical analysis fails, one can see that the current state of any corporate technical infrastructure is governed by processes, humans, machines, and the state that humans inflict upon those machines (or allow to emerge).

Contemplate this for a second: what if, in your next security planning effort, you spent time identifying the root causes of the last set of security problems detected by penetration testing, and finding solutions to them, instead of band-aiding them?

Every well-known measure for improving security posture is an artifact of someone trying to dig deeper into root causes.

- Closing ports is easy. Changing domain policies and endpoint protection is not too hard. But if your company ends up fixing the same things more than once, perhaps the ports were not the problem in the first place?

- Making sure that new services get deployed with proper authentication and firewall rules? Better. But do all the new initiatives follow the same set of rules?

- Making sure that all teams consider the security impact of every change to production systems? Sounds like good old security policy work, which slows down business infinitely, yet provides a sufficient security posture against most risks when used right.

- Focus on security awareness training for technical and non-technical teams? Incident drills?

- Lock down everything and allow a new file to be created in a shared folder only after two written requests? (I kid you not, there are businesses like that.)

Unfortunately, the tech industry has done itself more harm than good by creating a barrier of information asymmetry between technical and business executives. “Pick the right team, supply it with resources, and it will do good.” “Shoo, we don’t need your rigid policies and dumb checklists, we can just fix things.” A security incident with a stupid cause? Just fire some of the senior tech executives and find better ones, because you’re never going to understand what they do anyway.

If the root cause of every problem is humans, and humans are flawed in their judgment, then humans are all we have left to blame.

Now, let’s see what happens when you find different people to do the same job. Their judgment is flawed in a different way, and you’re back to square one. The band-aid didn’t work, and you’re still ignorant of the root causes.

Risk management vs band-aiding

What if, as a mental exercise, we tried to reduce (or eliminate) the causes of risk instead of band-aiding their manifestations? Consider a hypothesis for a second:

Processes (be they formal, formalized in code, or part of informal culture) are the root cause of any security incident.

Then, contemplate another possibility: that the root cause can be detected and fixed (at least to the extent needed to deal with the problems detected so far).

How can we get there?

It has nothing to do with technology, in fact. It requires three things:

- Challenge the problematic status quo to get to the root causes. Taking aspirin for the pain is fine, but if the pain comes from a tumor pressing on your brain, you had better challenge the status quo before you die. Short-term relief doesn’t yield long-term health. Finding more compromises and a better painkiller typically costs as much as coming up with a solution to the root causes.

- Recognize that every vulnerability, weakness, and resulting incident damage is a manifestation of an architectural or process deficiency (or of the absence of any proper process). We live in a world where our systems run hundreds of pieces of software other people wrote. Blaming their authors for writing not-100%-secure code isn’t going to make your life better. Treating any piece of code as an attack vector and building defenses around it according to its trust level is. Treating deficiencies properly yields more long-term benefit than any advanced threat mitigation technology.

- Deal with the root causes of all the deficiencies. This yields the most economically efficient risk mitigations, yet it requires sustained focus on root causes alongside immediate cures for short-term threats. Hospitals stabilize critical patients with painkillers to get them into a curable state, not to make them feel good.

Finding someone or something to blame is not equal to finding the root cause.

Blaming either masks the problem, in the hope that replacing one element of a flawed system will change it, or creates self-sustaining conflicts and power struggles in management. Neither solves the problem. In fact, blame becomes another way of living with the contradictory status quo by attaching scapegoat positions to it.

The false boundaries of root cause analysis are the status quo and helplessness in the face of accepted contradictions.

The real boundary to which you should push root cause analysis is whatever you could change if you had good faith that it would have a real impact on the problem.

Because, if you do it thoroughly, the results will surprise you.

The root cause analysis 101 (and 202, and 303)

If there’s something I’ve learned about root cause analysis over the years, it’s that the methodology is obvious, yet people rarely apply it well. It’s not about asking “Why” 5 times (although that helps), and not about combining the results into a cohesive narrative (although that’s almost necessary).

Most of the problems have to do with the way we think and perceive problems, not with the problems themselves:

Root causes tend to challenge our assumptions about reality (because the root cause of many problems is a conflict between our worldview and reality, or between competing worldviews).

We’re exhausted from figuring out the band-aid solution, yet happy to repeat the exercise over and over, because fixing the obvious problem feels like a good compromise once you have repressed and accepted the bigger problem behind it. Yet, as described above, it’s a futile effort.

How to seek root causes?

So how should we think about root causes to avoid masking the bigger problems and to find good solutions to them?

- Root causes are entities and processes, not people and events. If your system wasn’t built to deal with poor decisions and mistakes, it’s a system problem. If dealing with them wasn’t budgeted for, it’s a system problem again. If a random, unpredictable event wasn’t part of a plan covered by redundancy or defense in depth, it’s a system problem again.

- The typical straw-man argument heard here is that no battle plan survives contact with the enemy. However, the locally correct definition of a system here is “a harmonious set of tools and processes providing sufficient control over the things your business does control, and sufficient reactive capability for known and unknown unknowns”. Building up proper capabilities decreases the chances of miserable failure.

- Good identification of root causes ends up with a few causes that govern most effects. Perfect identification typically yields not just solutions to current problems, but valuable side effects (by eliminating deficiencies you haven’t yet paid attention to, or by helping you recognize other opportunities).

- The first side effect of the point above is that you don’t need all the data to detect most root causes: if they govern the effects you can easily see, they likely govern the rest of the effects too. This assumption, when used properly, makes high-quality penetration testing as efficient at improving security posture as a full top-down audit. It just requires some digging into the root causes of the problems detected.

- Each identified root cause and its effects come with sufficient actionable mitigation steps and evident points of resistance. These points are a product of previous compromises scarred into management processes.

- A combination of measures that address root causes does not imply revamping your infrastructure and processes everywhere. By addressing a few causes, you eliminate most of the problematic effects.

- While the effects are typical of many infrastructures and organizations, the root causes tend to be fairly organization-specific.

It’s important to remember one thing: problems will never go away completely. Some are inherent to the current state of the business, which is a real constraint on change; some are a function of the current evolution of technology; some, of the current evolution of the human race. You will always have poor human judgment to some degree, and you will always have software that is buggy in unexpected ways. You can’t have a bigger budget than is reasonable for business survival, nor can you have less infrastructure mess than your current team is able to maintain. However, both the available resources and the acceptable mayhem are always far from the levels set by our expectations, if you challenge them hard enough:

there is always room for radical improvement if you understand the causal relationships and laws that govern the system of your business.

Practicalities

Software development and infrastructure management have finally arrived at a shared frame of mind: it’s all development and maintenance, carried out in different proportions by different departments, but working best when responsibility and approaches are shared.

One root cause of trouble that most organizations share is so-called temporal discounting when thinking about risk. It always feels more reasonable to quickly band-aid another vulnerability than to detect root causes and eliminate them. In reality, the two do not contradict each other (remember the point above about challenging accepted contradictions?): you can and should both band-aid the problem and dig for its root cause. Otherwise, just as with any technical debt, patching without addressing the debt leads to horrors. Time preference is a function of people not having sufficient certainty about tomorrow’s challenges, but in this case tomorrow’s challenges are driven by today’s temporal discounting.

There are risks that can be avoided by enforcing technical processes and solutions. The infrastructure engineering and cybersecurity industries are happy to offer you many, provided that you understand the risks and implications well enough to pick appropriate ones.

There are risks that can be minimized by adjusting processes. Any development lifecycle, no matter how agile, can be armored with an SSDLC, and any quality attribute that matters to the business (including security) can be measured. Those measurements should inform, alter, and improve processes in a self-sustaining learning loop.

But only under one important prerequisite.

Look for the root cause, Luke!

Whenever you read about 10 obvious vulnerabilities in your penetration testing report, don’t stop asking internally “why?” until you see how the 10 vulnerabilities converge into a few reasons. Then address those reasons.
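To make the convergence concrete, here is a minimal sketch of what the bookkeeping might look like once you have done the “why?” digging. Everything in it is an assumption for illustration: the finding titles, the root cause labels, and the helper names `rank_root_causes` and `coverage` are invented, and assigning a cause to each finding is the human analysis part that no script does for you.

```python
from collections import Counter

# Hypothetical pentest findings as (title, root cause) pairs.
# The root cause labels are the output of your own "why?" analysis.
FINDINGS = [
    ("Open database port on staging host", "no deployment baseline"),
    ("Admin panel exposed without auth", "no deployment baseline"),
    ("Default credentials on message broker", "no deployment baseline"),
    ("Debug endpoint enabled in production", "no deployment baseline"),
    ("Firewall rule widened for a hotfix", "no change review"),
    ("TLS disabled on internal API", "no change review"),
    ("Stale service account with admin rights", "no change review"),
    ("Password shared in team chat", "credential sharing culture"),
    ("Single SSH key used by whole team", "credential sharing culture"),
    ("Outdated library in billing service", "no dependency tracking"),
]


def rank_root_causes(findings):
    """Count findings per root cause, most frequent first."""
    counts = Counter(cause for _title, cause in findings)
    return counts.most_common()


def coverage(findings, top_n):
    """Share of findings explained by the top_n most frequent root causes."""
    if not findings:
        return 0.0
    ranked = rank_root_causes(findings)
    covered = sum(count for _cause, count in ranked[:top_n])
    return covered / len(findings)


if __name__ == "__main__":
    for cause, count in rank_root_causes(FINDINGS):
        print(f"{count:2d}  {cause}")
    print(f"Top 2 causes cover {coverage(FINDINGS, 2):.0%} of findings")
```

In this made-up data set, two causes account for 7 of the 10 findings, which is the kind of picture that tells you where a process fix pays off more than ten separate patches.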

The long-term impact of removing the opportunity to introduce new vulnerabilities into the system is far more meaningful than obsessively applying duct tape everywhere.

Here’s a scared duct-taped penguin for no apparent reason — you’ve made it to the end of this blog post.
